Inside my message testing lab 🧪

How I help SaaS marketers and PMMs track and test their messaging

how I feel when product marketers are letting their messaging run blind

If I had to pick one word to summarize the 8 years I’ve worked in marketing, I would choose “experimentation”:

→ I had to experiment with different roles within this function

→ I had to experiment with different growth channels to generate leads

→ I had to experiment with hundreds of outbound campaigns to book meetings

→ I had to experiment and iterate on my offer over and over since going solo

My whole career has been full of tests and experiments, and that helped me progress faster than I could have hoped, especially because I took calculated risks and always tried to remove my opinion from the situation (at least at first!).

Lack of experimentation and testing creates random results: you can’t properly achieve what you’re not planning for. The same happens with your product messaging.

Too many SaaS marketers have to settle for average or even mediocre messaging, because their ownership and influence are split among opinionated stakeholders.

When I asked 65 product marketers about their messaging, the answers showed that a lack of testing and performance tracking leaves product marketers stuck with bad messaging, poor results, and low usability.

But it highlights a bigger, broader issue: the way most PMMs test their messaging is flawed.

So I went to the drawing board and wrote a full-on, crazy 47 pages on how I would test messaging with product marketing managers.

The formula I came up with is simple:

I combine lead volume and pipeline velocity per messaging variant, shaped by the angles and gaps in your current positioning, to identify which message actually drives revenue, not just clicks.

And today, I’m inviting you to my message testing lab 🧪🔬

What I hope you get out of this newsletter edition:

Finally understand what “testing messaging” actually means

Get curious to try the process yourself

Get inspired to do it yourself without a full research team or fancy tooling

Reach out to me so we can work together (👋)

Let’s get started!

What Messaging Testing Isn’t

Testing your messaging doesn’t mean running empty A/B tests or constantly changing your homepage headline to show how many use cases/personas you can cover.

But the thing is, messaging is often:

❌ Untested (“we wrote a landing page and we pray it works”)

❌ Over-optimized at the surface level (button tests, headline swaps)

Example of what I use to identify the problem

Team feedback is great, but it’s often misaligned and ignores the most important input: the one from your customers.

If you’re not talking to customers on a weekly basis, you absolutely need to test your messaging, otherwise you’re running your GTM blindly.

Running scattered A/B tests, random ads, and just talking with sales falls into a very dangerous category: primitive testing.

Running A/B tests can be a good alternative, but you usually need a LOT of traffic for the results to matter, and they don’t reflect the qualitative side of messaging.

A/B testing’s origins go back to the 1920s, and running these tests without the big picture, or without a scientific yet practical method, stops you from gaining influence and control over new positioning.

This is most painful when I talk to solo PMMs and marketers, because you need to find a proper way to link messaging to revenue, without more time and resources at your disposal.

A common misconception about messaging testing is that it’s just about picking the “catchiest” headline or the most clever wording.

There are a few more misconceptions about testing your messaging:

Messaging testing tells you the absolute truth

If it worked for this audience, it will work for all

A/B testing is the only way to test messaging

Once you’ve found the winner, you’re done

Good messaging testing answers “Does this resonate and motivate our target audience to take the next step?” rather than “Which sentence sounds nicer?”

But before testing your messaging, you should make sure it’s structured properly.

Structuring your Messaging Before Testing

Ok, so how do you properly test your messaging then? First, you need to make sure you have all of the right elements together.

Writing better copy won’t fix your messaging problem.

You need a system that makes copy modular.

Teams keep shipping one-offs: new page, new deck, new script. Each one starts from zero.

Message houses, persona docs, and feature lists feel complete, but:

• They don’t connect strategy → product.

• They ignore jobs to be done.

• They can’t be remixed across channels.

• They treat messaging as words, not structure.

That’s where the messaging pyramid comes into play:

How to quickly adapt your microcopy across different channels

The layers on top are led by strategy, while the layers at the bottom are led by product.

Once it’s built, you can remix the layers across content, sales, and marketing.

🔺 Top of the Pyramid – Strategy-Led

These layers shape narrative, positioning, and market fit. They're foundational and abstract, driven by customer insights, beliefs, and strategic direction.

🔻 Bottom of the Pyramid – Product-Led

These layers are executional and tangible, often rooted in how your product works and the specific outcomes it delivers.

At a minimum, you should have the following 7 elements figured out before you test your messaging.

1️⃣ Product Category: The market context or space your product competes in and how you define it.

2️⃣ JTBD (Jobs to Be Done): The key tasks or goals your customers are trying to accomplish when they choose your solution.

3️⃣ POV (Points of View): Belief-led statements that frame how you see the problem and why your approach is uniquely right.

4️⃣ Objections: Common doubts or concerns your audience may have that need to be addressed to build trust.

5️⃣ Signals: Observable pain signals or situations that indicate a customer might need your product.

6️⃣ Benefits: The specific, tangible improvements or outcomes users get from your features.

7️⃣ Capabilities & Features: The product’s capabilities or components that enable the promised value.
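To make the "modular" idea concrete, here is a minimal sketch of the 7 elements as a data structure with a readiness check. The class name, field names, and example values are my own assumptions for illustration, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class MessagingPyramid:
    """The 7 elements, listed in the same order as above."""
    product_category: str
    jobs_to_be_done: list[str]
    points_of_view: list[str]
    objections: list[str]
    signals: list[str]
    benefits: list[str]
    capabilities: list[str]

    def missing_layers(self) -> list[str]:
        """Names of the layers that are still empty."""
        return [name for name, value in vars(self).items() if not value]

    def is_ready_to_test(self) -> bool:
        """All 7 elements need content before a test makes sense."""
        return not self.missing_layers()


# Hypothetical example content, for illustration only.
pyramid = MessagingPyramid(
    product_category="Message testing for B2B SaaS",
    jobs_to_be_done=["Prove which message drives pipeline"],
    points_of_view=["Opinions are not evidence"],
    objections=[],  # not filled in yet
    signals=["Leads drop off before demos"],
    benefits=["Faster stakeholder alignment"],
    capabilities=["Variant tracking per channel"],
)
print(pyramid.missing_layers())
```

Keeping each layer as its own field is what lets you pull a single layer (say, one objection or one benefit) into an ad, a DM, or a headline without rewriting everything from zero.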

Once you have these, you can compare the different elements to differentiate from the competition, with microcopy that reflects that difference more clearly.

8 Steps to Test Your Messaging

Once your messaging is all structured in the pyramid, you can start following the 8 steps to test your messaging.

This is from the message testing newsletter collab I did with Alex Estner, on MRR Unlocked.

This is the shorter version; you can check out the full newsletter here:

1. Define a clear hypothesis and objective (and know why you're testing)

Every messaging test should start with a clear reason.

Are leads dropping off before demos?

Are people misunderstanding what you do?

Are you expanding into a new segment?

→ This isn’t a guessing game.

You’re setting the expectation for what should improve if the messaging lands.

We recommend setting uplift objectives.

Examples of uplift objectives:

✅ Boost SQL conversion rates by 15-20% → YES, measurable within 2 weeks.

2. Choose your Audience & Channels based on Context

Messaging tests aren’t just for landing pages.

Run your messaging tests on the channels you are already running. This can be email outbound, LinkedIn outbound, social ads, search ads etc.

Here are channels you can think about:

→ Outbound (Email & LinkedIn & Cold Calls)

→ Social Ads (LinkedIn, Facebook)

→ Organic Content (LinkedIn posts)

It’s important that you test your different messaging variants in the right context.

So the different messaging variants need to be:

✅ Adapted to the channel where you use them (e.g. social ad copy vs. LinkedIn DM copy)

✅ Reflected in the microcopy (DM, website headline, ad copy…)

3. Choose different messaging angles

Test 2–3 meaningfully different messaging angles against each other.

Focus on what you’re emphasizing:

Are you framing the problem differently?

Are you highlighting a new benefit?

Are you repositioning the product for a different buyer?

Below you’ll find 3 messaging variants, each focusing on a different angle.

4. Define your Testing Budget

Allocate enough funds to get meaningful data and validate uplift objectives within the testing time frame, you should start with

Let’s assume we have the following 2-Week Test Objectives:

Increase SQL conversion rates by 15-20% (measurable via CRM)

Improve win rate by reducing objections related to differentiation (measurable via sales call analysis).

Ask yourself this question:

“Does the uplift in leads and potential revenue exceed at least 3x your testing budget?”

✅ If yes:

Good! Just make sure you have enough to achieve your uplift objectives.

❌ If no:

Increase your testing budget to match or exceed the 3x on potential revenue.
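Here is a rough sketch of that 3x check in code. The way I estimate revenue uplift (extra SQLs × win rate × average deal value) is an assumption for illustration, and all numbers are hypothetical; plug in your own model and figures.

```python
def estimate_revenue_uplift(extra_sqls: int, win_rate: float, avg_deal_value: float) -> float:
    """Rough uplift estimate (assumed model): extra SQLs x win rate x average deal value."""
    return extra_sqls * win_rate * avg_deal_value


def passes_3x_rule(revenue_uplift: float, testing_budget: float, multiple: float = 3.0) -> bool:
    """True when the expected uplift is at least `multiple` times the testing budget."""
    if testing_budget <= 0:
        raise ValueError("testing_budget must be positive")
    return revenue_uplift >= multiple * testing_budget


# Hypothetical numbers, for illustration only.
uplift = estimate_revenue_uplift(extra_sqls=4, win_rate=0.25, avg_deal_value=15_000)
print(passes_3x_rule(uplift, testing_budget=4_000))  # compares 15,000 against 3 x 4,000
```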

5. Respect the qualified lead threshold

You don’t need hundreds of leads to validate messaging, but you do need enough right-fit conversations to spot patterns.

Aim for at least 8–12 qualified ICP leads per variant before jumping to conclusions. Anything below that risks false positives.

Why 8? That’s the lowest point where the early signal becomes somewhat stable — it’s not statistically significant, but it’s practically helpful.

Below that, patterns are too noisy to trust.
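A small sketch of that threshold as a guardrail before you read any results. The function name and the variant counts are hypothetical; the minimum of 8 is the lower bound mentioned above.

```python
MIN_QUALIFIED_LEADS = 8  # lower bound of the 8-12 range above


def ready_to_compare(
    qualified_leads: dict[str, int], minimum: int = MIN_QUALIFIED_LEADS
) -> dict[str, bool]:
    """Flag, per variant, whether it has enough qualified ICP leads to read a signal."""
    return {variant: count >= minimum for variant, count in qualified_leads.items()}


# Hypothetical counts, for illustration only.
status = ready_to_compare({"variant_a": 9, "variant_b": 5})
print(status)
```

If any variant comes back `False`, keep the test running (or widen the audience) instead of declaring a winner.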

6. Analyze results to identify winning messaging variants

1️⃣ Start with volume:

→ Did one message generate more qualified leads than the other?

→ Was the difference meaningful (15–20% or more)?

→ Did it do that without inflating unqualified junk?

2️⃣ Then look at velocity:

→ Did one variant move leads through the funnel faster?

→ Did it shorten the time from first touch → demo → close?

→ Did it create momentum, not just clicks?
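The volume and velocity questions above can be sketched as a simple comparison between two variants. This is a minimal illustration, not my proprietary system: the field names, the use of the median for velocity, and the sample numbers are all assumptions.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class VariantResult:
    name: str
    qualified_leads: int
    unqualified_leads: int
    days_to_demo: list[float]  # days from first touch to demo, one entry per lead


def volume_uplift(baseline: VariantResult, challenger: VariantResult) -> float:
    """Relative change in qualified leads versus the baseline variant."""
    if baseline.qualified_leads == 0:
        raise ValueError("baseline needs at least one qualified lead")
    return (challenger.qualified_leads - baseline.qualified_leads) / baseline.qualified_leads


def junk_share(variant: VariantResult) -> float:
    """Share of all leads that were unqualified."""
    total = variant.qualified_leads + variant.unqualified_leads
    return variant.unqualified_leads / total if total else 0.0


def compare(baseline: VariantResult, challenger: VariantResult, min_uplift: float = 0.15) -> dict:
    """Answer the volume and velocity questions for one pair of variants."""
    return {
        "meaningful_volume_uplift": volume_uplift(baseline, challenger) >= min_uplift,
        "no_junk_inflation": junk_share(challenger) <= junk_share(baseline),
        "faster_to_demo": median(challenger.days_to_demo) < median(baseline.days_to_demo),
    }


# Hypothetical results, for illustration only.
a = VariantResult("variant_a", qualified_leads=10, unqualified_leads=10, days_to_demo=[12, 14, 16])
b = VariantResult("variant_b", qualified_leads=12, unqualified_leads=9, days_to_demo=[8, 10, 12])
print(compare(a, b))
```

A variant that passes all three checks is a candidate winner; the sales debrief in the next step is where you confirm it.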

7. Debrief with sales and look for repeatable patterns

After the test, don’t just look at the numbers. Ask:

Which message got people talking?

Were there fewer objections?

Did any prospects echo your phrasing back to you?

Did one message lead to bigger or faster deals?

This is where the qualitative signal becomes a strategic direction. If one variant repeatedly brings in better-fit leads who convert faster and “get it” sooner, you’re onto something.

8. Apply winning messaging to your GTM

Once you have your “battle-tested” messaging, you have proof of how much it impacts your lead velocity and volume.

Just apply the winning versions to your go-to-market, and keep improving.

How I Actually Test Messaging (in 3 weeks)

Testing your messaging should happen in a real-life scenario, with people who act on it, not with people paid to tell you what they like.

You don’t need permission or a research team, just the right lens to show your team what brings results. And that doesn’t mean you should break the bank, especially if you’re starting out.

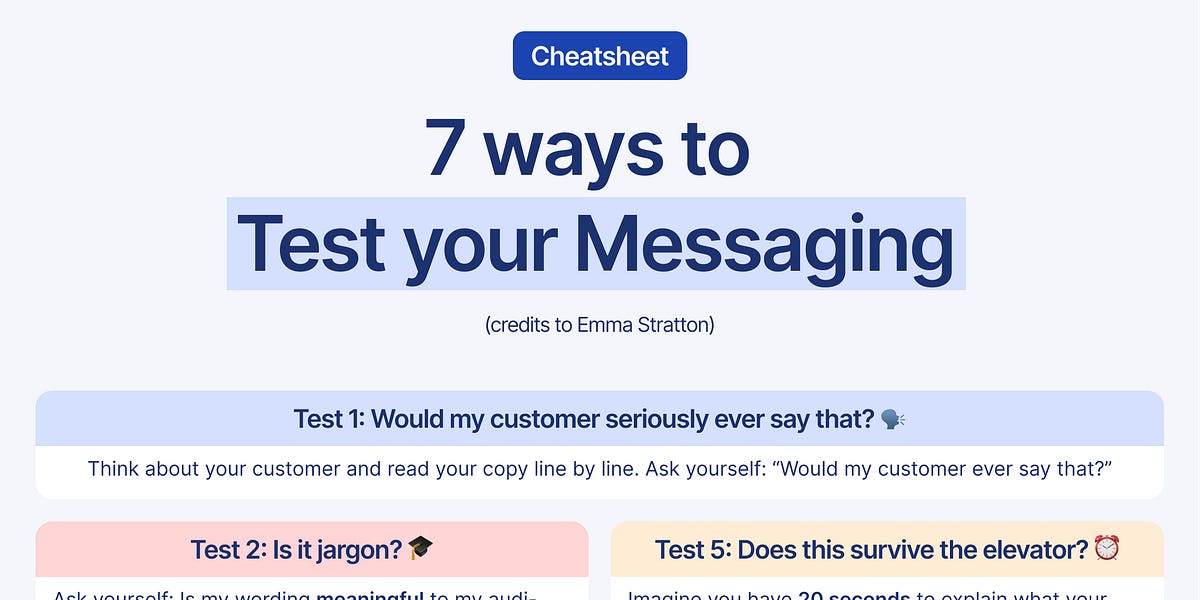

There are great free examples, like the 7 ways to test your messaging by Emma Stratton.

Free is good, but it won’t always show the quantifiable impact of your messaging. Sometimes you need a scientific yet practical method.

Here’s a video explaining a premium system for PMMs and my testing ROI calculator:

That’s how you can gauge if it’s worth it to hire me, or simply test your messaging.

TL;DR: I’ve built this framework with experimentation in mind, and around two main factors:

Lead volume + lead velocity

So if you’ve been trying to test your messaging, but you don’t have time to follow this process, I can help you with my offer in 3 weeks.

Who is it for? Product marketers and marketers at B2B SaaS orgs with 50 to 500 employees, with a sales-led GTM, that are running ads.

Here’s how it works:

Week 1 - Hypothesis

We'll audit your existing positioning and messaging, and then run a stakeholder workshop to align on objectives and the message variants we'll be testing.

Week 2 - Testing

We'll write the microcopy for your variants, including ads and landing pages, before running them through our own proprietary testing system.

Week 3 - Analysis

We'll analyze the results from the testing and give you a detailed report outlining the most effective variant and giving you the messaging you need to roll it out.

Check out my website, and let me know if you want a free analysis of your messaging to see if you might need to test it.

So whether you’re building a way to test things for free or you need something stronger, let’s chat.

That’s all for this time, happy testing! 👋